Introduction

Regularization comes up constantly in machine learning, but what exactly does it mean? In simple terms, regularization is a set of techniques for preventing overfitting. Overfitting occurs when a model performs well on the data it was trained on but fails to generalize to new data, which leads to inaccurate predictions in practice. In this blog post, we will look at what regularization is and how it improves the generalization of machine learning models.

Image by Canva

Understanding the Basics of Regularization

To understand regularization, we need to grasp its basic principle. At its core, regularization introduces additional constraints on a machine learning model during training. These constraints discourage the model from fitting the training data too closely, including its noise, which in turn promotes better generalization to new, unseen data.

One commonly used method is L2 regularization, known as ridge regression when applied to linear regression. L2 regularization adds a penalty proportional to the sum of the squared coefficients to the model’s loss function, which shrinks the coefficients towards zero without usually making them exactly zero. This helps control the model’s complexity and reduces the chances of overfitting.

Another popular technique is L1 regularization, known as Lasso regression when applied to linear regression, which adds a penalty proportional to the sum of the absolute values of the coefficients. This not only reduces the complexity of the model but also performs feature selection, because it drives some coefficients to exactly zero.
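Written out for linear regression with a squared-error loss, the two penalties look like this. The notation is an assumption introduced here for illustration: w is the coefficient vector with p entries, x_i and y_i are the i-th input and target, and λ ≥ 0 is the regularization strength.

$$
\text{Ridge (L2):}\quad \min_{w}\ \sum_{i=1}^{n}\bigl(y_i - x_i^\top w\bigr)^2 + \lambda \sum_{j=1}^{p} w_j^2
$$

$$
\text{Lasso (L1):}\quad \min_{w}\ \sum_{i=1}^{n}\bigl(y_i - x_i^\top w\bigr)^2 + \lambda \sum_{j=1}^{p} \lvert w_j \rvert
$$

With λ = 0 both reduce to ordinary least squares, and larger values of λ penalize large coefficients more heavily.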

By employing these regularization techniques, we can strike a balance between capturing the patterns in the training data and generalizing well to new data. This fundamental understanding is essential for applying regularization effectively in machine learning models.

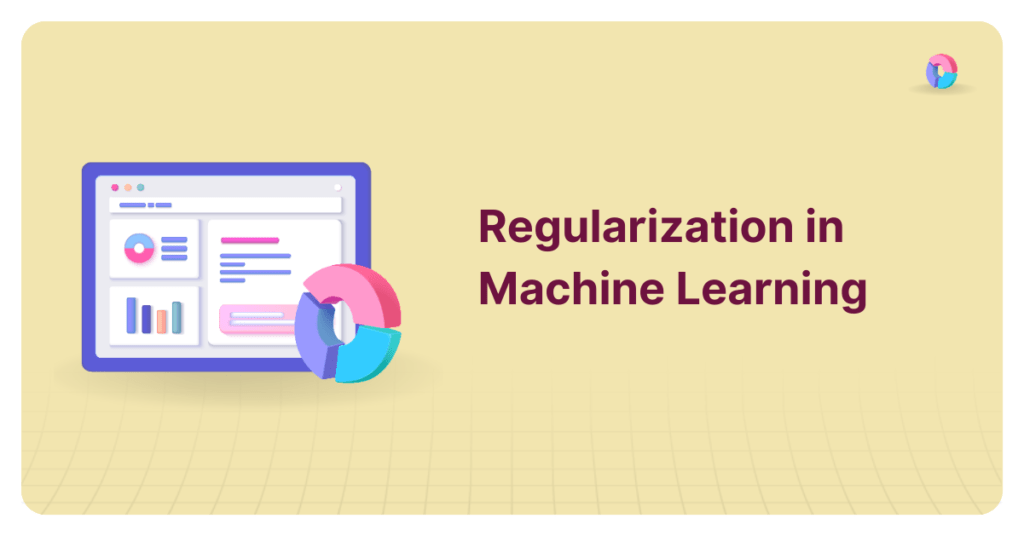

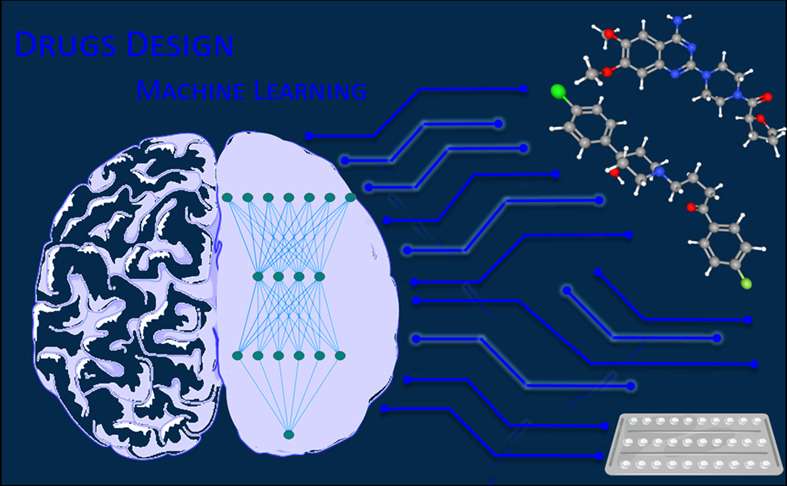

Image by Canva

Different Types of Regularization Techniques in Machine Learning

What are the different types of regularization techniques? One popular technique is L1 regularization, also known as Lasso regression. It adds a penalty term based on the absolute values of the coefficients.

This not only reduces the complexity of the model but also performs feature selection by forcing some coefficients to become exactly zero. L1 regularization is especially useful when we have a large number of features and want to identify the most important ones.
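To see why an L1 penalty can produce coefficients that are exactly zero, here is a minimal sketch for the simplest possible case: a single feature, no intercept, and the objective 0.5 * sum((y - w*x)^2) + lam * |w|. In that case the minimizer has a closed form based on the soft-thresholding operator. The function names and the toy data are assumptions made up for illustration, and each feature is fitted on its own rather than jointly.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam; return exactly 0.0 when |z| <= lam."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_1d(xs, ys, lam):
    """Minimize 0.5 * sum((y - w*x)**2) + lam * |w| for a single weight w."""
    sxy = sum(x * y for x, y in zip(xs, ys))  # correlation of feature and target
    sxx = sum(x * x for x in xs)              # squared norm of the feature
    return soft_threshold(sxy, lam) / sxx


# Toy data (hypothetical): one informative feature and one nearly useless one.
strong = [1.0, 2.0, 3.0, 4.0]
weak = [1.0, -1.0, 1.0, -1.0]
target = [2.0, 4.0, 6.0, 8.0]

w_strong = lasso_1d(strong, target, lam=5.0)  # shrunk, but still nonzero
w_weak = lasso_1d(weak, target, lam=5.0)      # weak signal is cut to exactly 0.0

print(w_strong, w_weak)
```

The weak feature's correlation with the target falls below the threshold, so its weight becomes exactly zero. That is the feature-selection effect. Practical Lasso solvers apply the same operator one coordinate at a time across many features.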

Another technique is L2 regularization, also known as ridge regression. It adds a penalty term based on the squared values of the coefficients to the loss function of the model, which shrinks the coefficients towards zero without usually eliminating them. This helps control the model’s complexity and reduces the chances of overfitting.
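The shrinking effect of the L2 penalty is easy to see in a one-feature sketch. Assuming a model with a single weight, no intercept, and the objective sum((y - w*x)^2) + lam * w^2, the minimizer is w = sum(x*y) / (sum(x*x) + lam). The function name and data below are hypothetical.

```python
def ridge_1d(xs, ys, lam):
    """Minimize sum((y - w*x)**2) + lam * w**2 for a single weight w."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)  # lam in the denominator pulls w toward zero


# Toy data (made up): roughly y = 2x with a little noise.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

# Fit the same data with increasing regularization strength.
weights = {lam: ridge_1d(xs, ys, lam) for lam in (0.0, 1.0, 10.0, 100.0)}
for lam, w in weights.items():
    print(f"lam={lam:>5}: w={w:.4f}")
```

The weight gets smaller as lam grows, but it never reaches exactly zero, unlike with an L1 penalty.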

Elastic Net regularization is a combination of L1 and L2 regularization. It adds both penalties to the loss function, allowing for feature selection while controlling the model’s complexity. Other regularization techniques include dropout, which is used in neural networks and randomly sets a fraction of a layer’s units to zero at each training step, and early stopping, which halts training once performance on a held-out validation set stops improving.
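Dropout can be sketched in a few lines of plain Python. This version assumes the common "inverted" variant, which rescales the surviving units during training so that the expected activation is unchanged and nothing special is needed at prediction time. The function name, its parameters, and the sample activations are illustrative assumptions, not any particular library's API.

```python
import random


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate`, rescale the rest."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # dropout is disabled at prediction time
    keep = 1.0 - rate
    # Each unit survives with probability `keep`; survivors are scaled by 1/keep.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # seeded so the sketch is repeatable
hidden = [0.5, 1.2, -0.3, 0.8, 2.0, -1.1]  # hypothetical layer outputs

train_out = dropout(hidden, rate=0.5, rng=rng)
eval_out = dropout(hidden, rate=0.5, rng=rng, training=False)

print(train_out)
print(eval_out)
```

Because a different random subset of units is dropped at every training step, the network cannot rely on any single unit, which tends to reduce overfitting.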

By understanding and implementing these different types of regularization techniques, machine learning practitioners can improve the performance and generalization of their models, ensuring more accurate predictions and better real-world applications.

How Regularization Prevents Overfitting in Machine Learning Models

Regularization is a powerful technique in machine learning that can prevent overfitting, ultimately improving the performance and generalization of models. But how exactly does it prevent overfitting?

Regularization achieves this by introducing additional constraints to the model during training. These constraints act as penalties that discourage the model from fitting the training data too closely. By doing so, regularization helps control the model’s complexity and reduces the chances of overfitting.
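The idea of a penalty added to the training objective can be made concrete with a small sketch. The function below computes a mean squared error plus optional L1 and L2 penalty terms; setting both strengths gives an elastic-net style penalty. The function name, the parameter names `l1` and `l2`, and the data are assumptions chosen for illustration.

```python
def penalized_loss(weights, rows, targets, l1=0.0, l2=0.0):
    """Mean squared error of a linear model plus L1 and L2 weight penalties."""
    n = len(rows)
    mse = sum(
        (sum(w * x for w, x in zip(weights, row)) - y) ** 2
        for row, y in zip(rows, targets)
    ) / n
    l1_term = l1 * sum(abs(w) for w in weights)  # sum of absolute values
    l2_term = l2 * sum(w * w for w in weights)   # sum of squares
    return mse + l1_term + l2_term


# Two identical (perfectly correlated) features, so many weight vectors fit exactly.
rows = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
targets = [2.0, 4.0, 6.0]

modest = [1.0, 1.0]    # fits the data with small weights
extreme = [5.0, -3.0]  # fits the data equally well with large weights

for name, w in (("modest", modest), ("extreme", extreme)):
    plain = penalized_loss(w, rows, targets)
    ridge = penalized_loss(w, rows, targets, l2=0.1)
    print(f"{name}: unpenalized={plain:.2f}, with L2 penalty={ridge:.2f}")
```

Both weight vectors have zero training error, so the unpenalized loss cannot tell them apart. Once the penalty is added, the solution with smaller weights scores better, which is how regularization steers training away from needlessly complex fits.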

One way regularization achieves this is through techniques like L2 regularization (ridge regression) and L1 regularization (lasso regression). L2 regularization adds a penalty term that shrinks the coefficients of the features towards zero, while L1 regularization performs feature selection by forcing some coefficients to become exactly zero. Both of these techniques strike a balance between capturing the patterns in the training data and generalizing well to new, unseen data.

Additionally, regularization techniques such as elastic net regularization, dropout regularization, and early stopping provide further ways to prevent overfitting in different scenarios.
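Early stopping is the simplest of these to sketch. The helper below assumes a validation loss is recorded after every epoch and that training stops once that loss has failed to improve for a fixed number of consecutive epochs. The function name, the `patience` and `min_delta` parameters, and the loss values are all hypothetical.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0  # new best: reset counter
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch  # stop here, keep the best epoch's weights
    return len(val_losses) - 1, best_epoch  # never triggered: ran to the end


# Made-up validation curve: improves, then starts rising as the model overfits.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
print(stop_epoch, best_epoch)
```

In a real training loop the model weights from `best_epoch` would be restored after stopping, so the final model is the one that generalized best on the validation set.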

Overall, applying regularization techniques helps machine learning models overcome overfitting, leading to more accurate predictions on new data and better real-world applications.

Practical Applications of Regularization in Real World Scenarios

Regularization techniques in machine learning have a wide range of practical applications in real-world scenarios. One common application is in the field of finance, where regularization can be used when building models of stock prices or market trends. Financial data is noisy, so unregularized models easily fit patterns that do not persist. By limiting overfitting, regularization makes such models more reliable on unseen data, although it cannot guarantee accurate market predictions.

Another practical application is in the field of healthcare. Regularization techniques can be used to develop models for diagnosing diseases, predicting patient outcomes, or even identifying patterns in medical imaging data. These models can improve the accuracy of diagnoses and treatment plans, ultimately leading to better patient care and outcomes.

Regularization is also extensively used in natural language processing (NLP) tasks such as sentiment analysis, text classification, and machine translation. By preventing overfitting, regularization helps in building robust models that can effectively understand and process text data, enabling applications like chatbots, sentiment analysis tools, and language translation services.

Additionally, regularization techniques are employed in image and video processing tasks, such as object detection, image recognition, and video analysis. These applications benefit from regularization’s ability to prevent overfitting, allowing for accurate identification and analysis of objects or patterns in visual data.

In summary, regularization techniques have practical applications in various fields such as finance, healthcare, natural language processing, and image and video processing. By preventing overfitting, these techniques enhance the performance and generalization of machine learning models, making them indispensable tools in real-world scenarios.

Pros and Cons of Regularization

Regularization is a powerful technique in machine learning, but like any tool, it has its pros and cons. Let’s take a closer look at both sides of the regularization coin.

On the pro side, regularization helps prevent overfitting, which is a common problem in machine learning models. By adding constraints and penalties, regularization encourages models to generalize well to new, unseen data. This leads to more accurate predictions and better real-world applications. Regularization techniques also help control the complexity of models, making them more interpretable and easier to understand.

However, regularization is not without its drawbacks. One con is that it introduces bias into the model: the penalty deliberately trades a little bias for lower variance, and if it is too strong the model underfits and may downplay important features or relationships in the data. Additionally, choosing the right regularization technique and tuning its hyperparameters, such as the penalty strength, can be a challenging task, requiring expertise and experimentation.

Despite these drawbacks, the benefits of regularization generally outweigh the drawbacks. By effectively preventing overfitting, regularization improves the performance and generalization of machine learning models, making it an essential tool for data scientists and machine learning practitioners.

Future Trends and Advances in Regularization Techniques

As the field of machine learning continues to evolve and advance, so do the techniques and methods of regularization. Researchers and experts are constantly working on developing new and improved regularization techniques to address the challenges faced in different scenarios.

One future trend in regularization is the exploration of novel penalty functions. While L1 and L2 regularization have been widely used, researchers are investigating alternative penalty functions that can further enhance the performance and generalization of models. These new penalty functions may offer different trade-offs between model complexity and feature selection, allowing for more precise control over regularization.

Another promising trend is the integration of regularization with other machine learning techniques, such as deep learning. Regularization methods that are specifically designed for deep learning architectures are being developed, enabling the regularization of complex deep neural networks and improving their robustness and generalization capabilities.

Furthermore, there is an increasing focus on adaptive regularization techniques. These techniques dynamically adjust the regularization strength based on the complexity and characteristics of the data, allowing for more flexible and adaptive regularization.

As the field progresses, we can expect further advancements in regularization techniques that will enable more accurate predictions, better generalization, and improved performance of machine learning models in various domains. Exciting times lie ahead for the regularization field, and we can look forward to more innovative and effective techniques that push the boundaries of machine learning.

Video by Mısra Turp YouTube Channel

Conclusion: What is Regularization in Machine Learning

In conclusion, regularization is a fundamental concept in machine learning that plays a crucial role in building robust and generalizable predictive models. By incorporating regularization techniques such as L1, L2, and Elastic Net regularization, machine learning practitioners can effectively prevent overfitting, handle multicollinearity, and improve model generalization.

FAQs

1. Is regularization necessary for all machine learning models?

Regularization is not always necessary, but it’s generally recommended, especially for models with high complexity or limited training data, to prevent overfitting and improve generalization performance.

2. Can regularization techniques eliminate overfitting completely?

While regularization can help mitigate overfitting, it may not eliminate it entirely, especially in cases of highly complex models or noisy datasets. Regularization should be used in conjunction with other techniques such as cross-validation and feature engineering for optimal performance.

3. How do I know which regularization technique to use for my dataset?

The choice of regularization technique depends on various factors, including the dataset’s characteristics, the model’s complexity, and computational considerations. Experimentation and empirical validation are often necessary to determine the most suitable regularization approach for a given problem.
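As one example of such experimentation, the sketch below picks a regularization strength by k-fold cross-validation. It assumes a one-feature ridge model with no intercept, a small grid of candidate strengths, and synthetic data. The function names, the grid, and the data are made up for illustration.

```python
import random


def ridge_1d(xs, ys, lam):
    """Minimize sum((y - w*x)**2) + lam * w**2 for a single weight w."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def cv_error(xs, ys, lam, k=5):
    """Mean squared validation error of 1-D ridge over k interleaved folds."""
    n = len(xs)
    fold_errors = []
    for fold in range(k):
        val_idx = set(range(fold, n, k))  # every k-th point is held out
        train_x = [x for i, x in enumerate(xs) if i not in val_idx]
        train_y = [y for i, y in enumerate(ys) if i not in val_idx]
        w = ridge_1d(train_x, train_y, lam)  # fit on the training folds only
        err = sum((ys[i] - w * xs[i]) ** 2 for i in val_idx) / len(val_idx)
        fold_errors.append(err)
    return sum(fold_errors) / k


# Synthetic data (hypothetical): y = 3x plus Gaussian noise.
rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(20)]
ys = [3.0 * x + rng.gauss(0.0, 0.5) for x in xs]

grid = [0.0, 0.1, 1.0, 10.0, 100.0]
scores = {lam: cv_error(xs, ys, lam) for lam in grid}
best_lam = min(scores, key=scores.get)  # strength with the lowest validation error

print(scores)
print("best lam:", best_lam)
```

The same loop works for comparing techniques as well as strengths: fit each candidate on the training folds, score it on the held-out fold, and keep the one with the lowest average validation error.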

4. Does regularization affect model interpretability?

Regularization can affect model interpretability. L1 regularization (Lasso) often makes a model easier to interpret, because it performs feature selection by driving some coefficients to exactly zero. However, shrunken coefficients no longer reflect unpenalized effect sizes, so the trade-off between interpretability and predictive performance should be carefully considered when applying regularization techniques.

5. Can I use regularization with all machine learning algorithms?

While regularization is commonly associated with linear models such as linear regression and logistic regression, it can also be applied to many other machine learning algorithms to improve their generalization performance. Examples include the margin penalty in support vector machines, depth limits or pruning in decision trees, and weight decay or dropout in neural networks.